AI TRAILBLAZERS MEETUP EVENT

@ Second Sky

INTRO

The magic of creative tools continues to draw in consumers. Fifty-two percent of the companies on the web list are focused on content generation or editing, across modalities — image, video, music, speech, and more. Of the 12 new entrants, 58% are in the creative tool space.

These included four of the top five first-time listmakers by rank:

Art is always mediated by the tools we have at our disposal for communicating, and the nature of those tools is determined by the technical capabilities of the time.

JSYK

DAW stands for Digital Audio Workstation, and VST stands for Virtual Studio Technology.

A DAW is software used to produce music. It includes features such as a digital audio processor, a MIDI sequencer, virtual instruments, and music notation. DAWs also often include effects processing such as delay, reverb, compression, and EQ, and some offer project templates, version control, and folder hierarchies to help keep projects organized.

A VST is a plug-in format used inside a DAW to add functionality and sound processing to music production. VST plugins are typically either instruments (VSTi) or effects (VSTfx), but other categories exist. They often have a custom graphical user interface with controls that resemble the physical switches and knobs on audio hardware.
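To make the idea of an effect plug-in concrete, here is a minimal Python sketch of a feedback delay, one of the effects mentioned above. It is only an illustration under simplifying assumptions: the class and method names (`FeedbackDelay`, `process`) are hypothetical and are not part of the VST SDK, and a real plug-in would be written against that SDK (typically in C++) and would receive multi-channel buffers and a sample rate from the host. The sketch does show the core pattern: the host repeatedly hands the plug-in a block of samples, and the plug-in keeps state (here, a circular delay line) between calls.

```python
from typing import List


class FeedbackDelay:
    """Toy feedback-delay effect (hypothetical class, not the VST SDK API)."""

    def __init__(self, delay_samples: int, feedback: float = 0.5, mix: float = 0.5) -> None:
        if delay_samples <= 0:
            raise ValueError("delay_samples must be positive")
        self.buffer = [0.0] * delay_samples  # circular delay line
        self.pos = 0                         # read/write position in the delay line
        self.feedback = feedback             # fraction of each echo fed back in
        self.mix = mix                       # 0.0 = dry only, 1.0 = echoes only

    def process(self, block: List[float]) -> List[float]:
        """Process one block of samples, as a host would call a plug-in repeatedly."""
        out = []
        for x in block:
            delayed = self.buffer[self.pos]  # sample written delay_samples ago
            # Store the input plus a scaled copy of the echo so it repeats and decays.
            self.buffer[self.pos] = x + delayed * self.feedback
            self.pos = (self.pos + 1) % len(self.buffer)
            out.append((1.0 - self.mix) * x + self.mix * delayed)
        return out


if __name__ == "__main__":
    fx = FeedbackDelay(delay_samples=2, feedback=0.5, mix=0.5)
    print(fx.process([1.0, 0, 0, 0, 0, 0, 0]))  # [0.5, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```

Feeding in a single impulse shows the echoes: with a two-sample delay and 50% feedback, an echo arrives every two samples, each half as loud as the one before. Because the delay line lives on the object, splitting the same input across several `process` calls gives the same result, which is what lets a host stream audio through an effect block by block.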

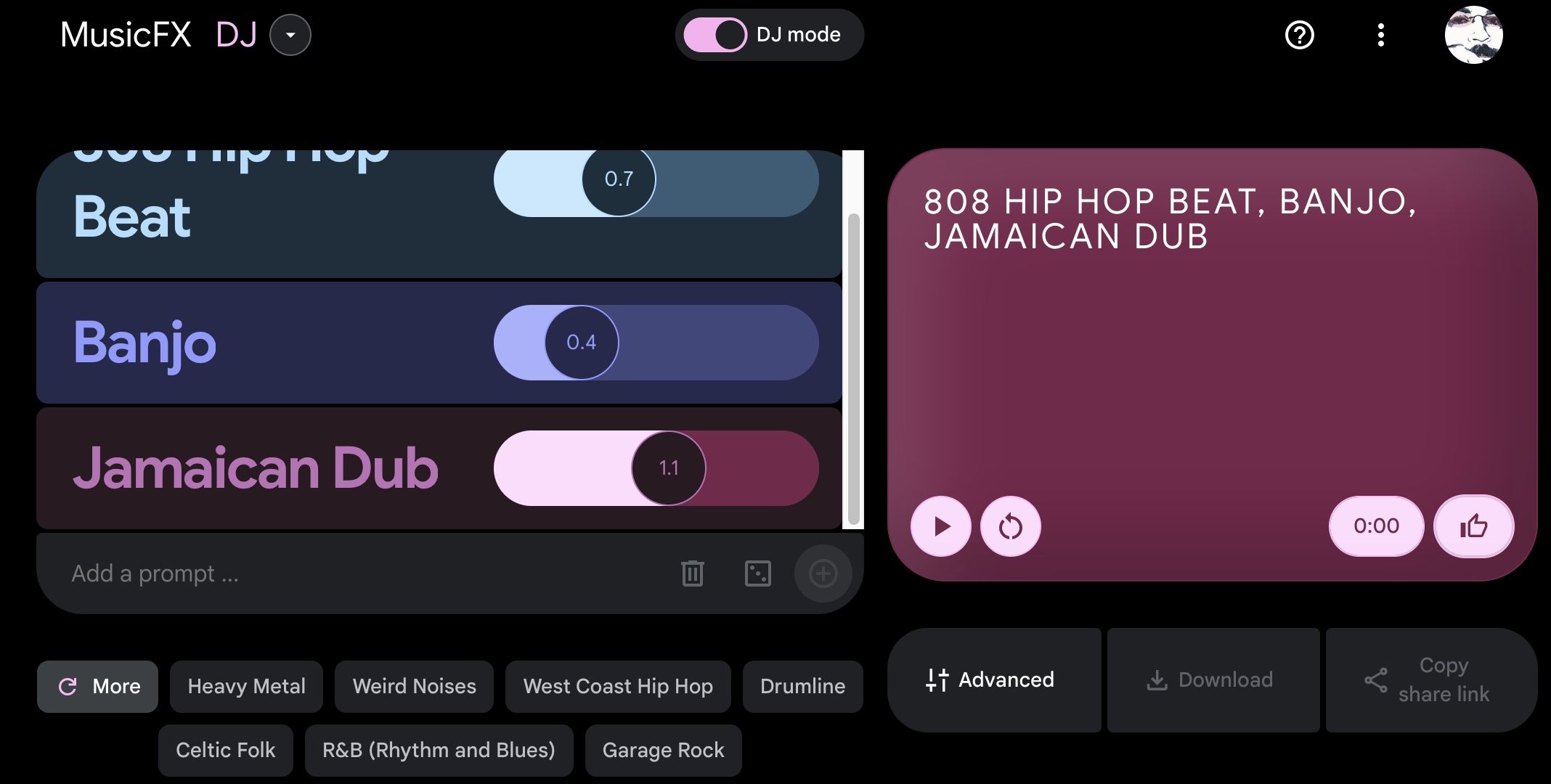

AI Generated Music Creation Tools with Text-to-Music Conversion

IBM Watson Beat uses artificial intelligence and cognitive computing to create music. It is designed to help musicians and artists create new sounds and songs by learning from musical data and from elements of music such as tempo, melody, and chord progressions, then using that information to generate original pieces. The system can also learn from user feedback and improve its music-making abilities over time.

AIVA (Artificial Intelligence Virtual Artist) is another popular AI music tool, widely used by composers and producers who want to create high-quality tracks quickly. It uses deep learning to generate original music based on user inputs such as tempo, mood, and instruments, and its user-friendly interface lets users customize the resulting tracks extensively.

Beatoven.ai is an AI-powered music creation platform that lets users create original compositions without any prior knowledge of music theory or instruments. It analyzes music patterns and generates new melodies and beats that users can customize and arrange into their own tracks.

An advantage of Beatoven.ai is its collaborative capabilities, which allow users to work together on projects in real-time. This is particularly useful for musicians and producers who want to collaborate on music projects remotely, as it eliminates the need for physical studio space and equipment.

Boomy is a music creation platform that allows users to create their own original songs in seconds. Boomy makes it possible for anyone to make music, regardless of their experience level. Users can choose from a wide range of genres, moods, and instruments to create a unique sound that reflects their individual style. And with the ability to customize the production and composition of each song, users can fine-tune their creations to their exact specifications.

But Boomy isn't just about making music – it's also about sharing it with the world. Users can submit their songs to streaming platforms and get paid when people listen. And with a global community of artists empowered by Boomy, users can connect with other like-minded musicians and collaborate on new projects.

One of the most exciting features is the ability to add vocals to a song. With the "Add Vocal" feature, users can choose to add custom vocals or try an experimental auto-vocal. Custom vocals allow users to sing, rap, or add a top-line to their song, while auto-vocal turns a short audio phrase or sample into an algorithmic vocal line.

Harmonai is a powerful music software designed to help musicians of all levels improve their understanding of music theory and composition. With its user-friendly interface and advanced features, Harmonai is an essential tool for anyone looking to enhance their musical skills and knowledge.

One of the key features of Harmonai is its chord progression generator. This tool allows musicians to quickly and easily create chord progressions for their songs, providing them with a solid foundation to build upon. The software also includes a range of different chord voicings and progressions, giving users the flexibility to create unique and personalized sounds.
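As a rough illustration of what a chord progression generator does under the hood, the Python sketch below builds diatonic triads in a major key by stacking thirds from the scale, then maps Roman-numeral degrees to chords. This is a generic music-theory sketch, not Harmonai's implementation. The function names are hypothetical, notes are spelled with sharps only, and chord quality (major, minor, diminished) simply follows from the key, so lowercase numerals are accepted for readability but not interpreted.

```python
from typing import List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]  # semitone steps of the major scale (W W H W W W H)
ROMAN = {"I": 1, "II": 2, "III": 3, "IV": 4, "V": 5, "VI": 6, "VII": 7}


def major_scale(tonic: str) -> List[int]:
    """Pitch classes (0-11) of the major scale starting on `tonic`."""
    pc = NOTE_NAMES.index(tonic)
    scale = []
    for step in MAJOR_STEPS:
        scale.append(pc)
        pc = (pc + step) % 12
    return scale


def diatonic_triad(tonic: str, degree: int) -> List[str]:
    """Stack thirds on a scale degree (1-7): degrees d, d+2, d+4, wrapping at the octave."""
    scale = major_scale(tonic)
    return [NOTE_NAMES[scale[(degree - 1 + i) % 7]] for i in (0, 2, 4)]


def progression(tonic: str, numerals: List[str]) -> List[List[str]]:
    """Turn Roman numerals (case ignored) into chords in the given major key."""
    return [diatonic_triad(tonic, ROMAN[n.upper()]) for n in numerals]


if __name__ == "__main__":
    print(progression("C", ["I", "V", "vi", "IV"]))
    # [['C', 'E', 'G'], ['G', 'B', 'D'], ['A', 'C', 'E'], ['F', 'A', 'C']]
```

A real generator would add voicings, inversions, and some way of choosing which numerals to string together; this sketch only covers the step from a chosen progression to concrete notes.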

Another useful feature of Harmonai is its scale generator. This tool allows musicians to explore different scales and modes, helping them to better understand the relationships between notes and chords. With Harmonai, users can experiment with different scales and modes, and even create their own custom scales to use in their compositions.
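The relationship between scales and modes can be shown in a few lines: every mode of the major scale is the same pattern of whole and half steps, rotated to start on a different degree, and a custom scale is just another step pattern that spans one octave. The Python sketch below is a generic illustration, not Harmonai's code; the function names are hypothetical and notes are spelled with sharps only.

```python
from typing import List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]  # semitone steps of the major (Ionian) scale
MODES = ["ionian", "dorian", "phrygian", "lydian", "mixolydian", "aeolian", "locrian"]


def scale_from_steps(tonic: str, steps: List[int]) -> List[str]:
    """Spell a scale from a tonic and a list of semitone steps that spans one octave."""
    if sum(steps) != 12:
        raise ValueError("steps must add up to 12 semitones (one octave)")
    pc = NOTE_NAMES.index(tonic)
    notes = []
    for step in steps:
        notes.append(NOTE_NAMES[pc])
        pc = (pc + step) % 12
    return notes


def mode(tonic: str, name: str) -> List[str]:
    """Each mode is the major-scale step pattern rotated to start on a different degree."""
    k = MODES.index(name.lower())
    return scale_from_steps(tonic, MAJOR_STEPS[k:] + MAJOR_STEPS[:k])


if __name__ == "__main__":
    print(mode("D", "dorian"))  # ['D', 'E', 'F', 'G', 'A', 'B', 'C']
    # A "custom" scale is just another step pattern, e.g. harmonic minor:
    print(scale_from_steps("C", [2, 1, 2, 2, 1, 3, 1]))  # ['C', 'D', 'D#', 'F', 'G', 'G#', 'B']
```

D Dorian uses the same notes as C major, which is exactly the kind of note-and-chord relationship a scale explorer helps make visible.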

Harmonai also includes a range of other features, such as a note and chord library, a rhythm generator, and a melody generator. These tools help musicians to explore different musical ideas and develop their own unique style. Whether you're a beginner or an experienced musician, Harmonai is an invaluable tool for anyone looking to take their musical skills to the next level.

Magenta Studio is a MIDI plugin for Ableton Live. It contains 5 tools: Continue, Groove, Generate, Drumify, and Interpolate, which let you apply Magenta models on your MIDI clips from the Session View.

Musicfy.lol is an AI-powered music tool that turns text into songs: describe the kind of track you want, and the AI generates it. The tool also lets you create a custom voice to sing your songs, or clone popular artists’ voices to sing them.

OpenAI Jukebox can generate music in a wide range of styles and genres, using a combination of deep learning techniques that analyze and synthesize audio data.

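It is based on two main components: a VQ-VAE (vector-quantized variational autoencoder) and autoregressive Transformer models. The VQ-VAE is trained on raw audio; it compresses audio into sequences of discrete codes and decodes those codes back into a waveform. The autoregressive models generate new code sequences one token at a time, conditioned on information such as artist, genre, and lyrics. Jukebox therefore works directly with audio rather than with symbolic notes and rhythms.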
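Jukebox compresses raw audio into discrete codes with a VQ-VAE and generates new music by producing code sequences autoregressively. The two-stage idea can be sketched in a few lines of Python. This is a toy illustration, not OpenAI's code: the codebook, frames, and transition table below are hypothetical hand-written numbers, whereas Jukebox learns its codebooks from audio and uses large Transformer models in place of the simple "previous code" lookup used here. `quantize` plays the role of the vector-quantization step, mapping each frame of features to its nearest codebook entry, and `sample_codes` plays the role of the autoregressive model, drawing each new code based on what came before.

```python
import random
from typing import List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(frames: List[Sequence[float]], codebook: List[Sequence[float]]) -> List[int]:
    """VQ step: replace each frame with the index of its nearest codebook vector."""
    return [min(range(len(codebook)), key=lambda k: sq_dist(f, codebook[k])) for f in frames]


def dequantize(codes: List[int], codebook: List[Sequence[float]]) -> List[List[float]]:
    """Greatly simplified decoder: look each code back up in the codebook."""
    return [list(codebook[c]) for c in codes]


def sample_codes(transitions: List[List[float]], start: int, length: int, seed: int = 0) -> List[int]:
    """Toy autoregressive model: each new code is drawn given the previous code."""
    rng = random.Random(seed)
    codes = [start]
    while len(codes) < length:
        weights = transitions[codes[-1]]  # probabilities for the next code
        codes.append(rng.choices(range(len(weights)), weights=weights)[0])
    return codes


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]  # hypothetical 3-entry codebook
    frames = [[0.1, -0.1], [0.9, 1.2], [-0.8, 0.4]]   # hypothetical audio features
    codes = quantize(frames, codebook)
    print(codes)  # [0, 1, 2]
    print(dequantize(codes, codebook))
    transitions = [[0.1, 0.8, 0.1], [0.1, 0.1, 0.8], [0.8, 0.1, 0.1]]
    print(sample_codes(transitions, start=0, length=8, seed=42))
```

Working with a short list of discrete codes instead of raw samples is what makes long-range generation tractable: the model predicts far fewer, more meaningful tokens, and the decoder turns them back into sound.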

One of the most impressive features of OpenAI Jukebox is its ability to generate music that is stylistically similar to existing songs or artists. Because the model is trained on a large dataset of music, it learns to recognize and replicate the patterns and characteristics of different genres and styles. This means that musicians and producers can use the technology to create new compositions in the style of their favorite artists, without needing extensive knowledge of music theory or production techniques.

Another potential advantage is a more streamlined production process. With traditional recording methods, producers spend significant time and money recording and editing audio tracks. A model like OpenAI Jukebox could, in principle, let producers generate music without extensive recording and production equipment, although Jukebox itself is slow to sample from and its output still falls short of professionally produced recordings. This kind of technology has the potential to reshape the music industry by democratizing production and enabling more people to create music.

Orb Producer is a cutting-edge artificial intelligence (AI) music composition software that is designed to assist music producers in creating professional-sounding compositions in a variety of genres.

The software utilizes a proprietary algorithm that analyzes the user's input and generates original musical ideas that can be used as the basis for complete compositions. Orb Producer also allows users to customize and manipulate the generated music in real-time, providing a high degree of flexibility and creative control.

Orb Producer runs as a VST plugin inside a wide range of digital audio workstations (DAWs), making it a versatile tool for almost any music production setup. It also includes advanced features such as chord progression generation, melody generation, and intelligent harmonization.

Riffusion generates music from text prompts.

Riffusion is the Stable Diffusion v1.5 model with no architectural modifications, fine-tuned on images of spectrograms paired with text. Audio processing happens downstream of the model: the generated spectrogram images are converted into sound afterward. The model can generate infinite variations of a prompt by varying the seed, and the same web UIs and techniques used with Stable Diffusion, such as img2img, inpainting, negative prompts, and interpolation, work out of the box.

An audio spectrogram is a visual way to represent the frequency content of a sound clip. The x-axis represents time, and the y-axis represents frequency. The color of each pixel gives the amplitude of the audio at the frequency and time given by its row and column.
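A spectrogram like the ones Riffusion is trained on can be computed by sliding a window along the audio and taking a Fourier transform of each frame. The Python sketch below does this with a naive DFT so it needs only the standard library. It is an illustration, not Riffusion's preprocessing: the frame size, hop length, and Hann window are assumptions, and a real pipeline would use an FFT library and often a perceptual (for example, mel) frequency scale. The result follows the layout described above: each row is a frequency bin, each column is a time frame, and each value is a magnitude that would be rendered as pixel color.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Periodic Hann window, which tapers frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal: List[float], frame_size: int = 64, hop: int = 32) -> List[List[float]]:
    """Magnitude spectrogram: rows are frequency bins, columns are time frames."""
    window = hann(frame_size)
    columns = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_size)]
        # Naive DFT, keeping bins 0..frame_size/2 (the non-negative frequencies).
        column = []
        for k in range(frame_size // 2 + 1):
            coeff = sum(frame[i] * cmath.exp(-2j * math.pi * k * i / frame_size)
                        for i in range(frame_size))
            column.append(abs(coeff))
        columns.append(column)
    # Transpose so that spec[k][t] is the magnitude of bin k at frame t.
    return [list(row) for row in zip(*columns)]


def bin_to_hz(k: int, frame_size: int, sample_rate: int) -> float:
    """Center frequency of bin k."""
    return k * sample_rate / frame_size


if __name__ == "__main__":
    sr = 8000
    tone = [math.sin(2 * math.pi * 1000 * n / sr) for n in range(512)]  # 1 kHz sine
    spec = spectrogram(tone)
    first_column = [row[0] for row in spec]
    peak = first_column.index(max(first_column))
    print(peak, bin_to_hz(peak, 64, sr))  # 8 1000.0
```

For a 1000 Hz sine sampled at 8000 Hz with 64-sample frames, each bin is 8000 / 64 = 125 Hz wide, so the brightest row in every column is bin 8. Turning such an image back into audio also requires recovering phase, which this magnitude-only sketch discards.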

With diffusion models, it is possible to condition their creations not only on a text prompt but also on other images. This is incredibly useful for modifying sounds while preserving the structure of the original clip you like. You can control how much to deviate from the original clip and towards a new prompt using the denoising strength parameter.
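The effect of denoising strength can be sketched as follows. In img2img, the original clip's spectrogram is encoded, partially noised, and then denoised toward the new prompt; strength decides how far into the noise schedule that starting point sits. The step arithmetic in `img2img_schedule` is modeled loosely on how the Hugging Face diffusers img2img pipeline picks its starting step, and `noise_latent` shows the standard forward-diffusion blend of signal and Gaussian noise. Both function names are hypothetical, and this is a simplified sketch rather than library code.

```python
import math
import random
from typing import List, Tuple


def img2img_schedule(num_inference_steps: int, strength: float) -> Tuple[int, int]:
    """Return (index of the first denoising step, number of steps actually run)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # Higher strength -> start earlier in the schedule (noisier), so more steps run.
    init_steps = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_steps, 0)
    return t_start, num_inference_steps - t_start


def noise_latent(x0: List[float], alpha_bar: float, seed: int = 0) -> List[float]:
    """Forward diffusion: sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * Gaussian noise.

    alpha_bar near 1 keeps x0 almost intact (low strength); near 0 is almost pure
    noise (high strength). The seed fixes the noise, so a new seed gives a new variation.
    """
    rng = random.Random(seed)
    keep, spread = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + spread * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    print(img2img_schedule(50, 0.75))  # (13, 37)
    print(img2img_schedule(50, 1.0))   # (0, 50)
    print(noise_latent([1.0, 2.0], alpha_bar=1.0))  # [1.0, 2.0]
```

With 50 steps and a strength of 0.75, denoising starts at step 13 and runs the remaining 37 steps. A strength of 1.0 largely discards the original clip's structure, while values near 0 return something very close to it. Changing `seed` changes the noise, which is also how varying the seed produces different variations of the same prompt.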

As AI music becomes more accessible and popular, it has become the center of a cultural debate. AI creators defend the technology as a way to make music more accessible, while many music industry professionals and other critics accuse creators of copyright infringement and cultural appropriation.

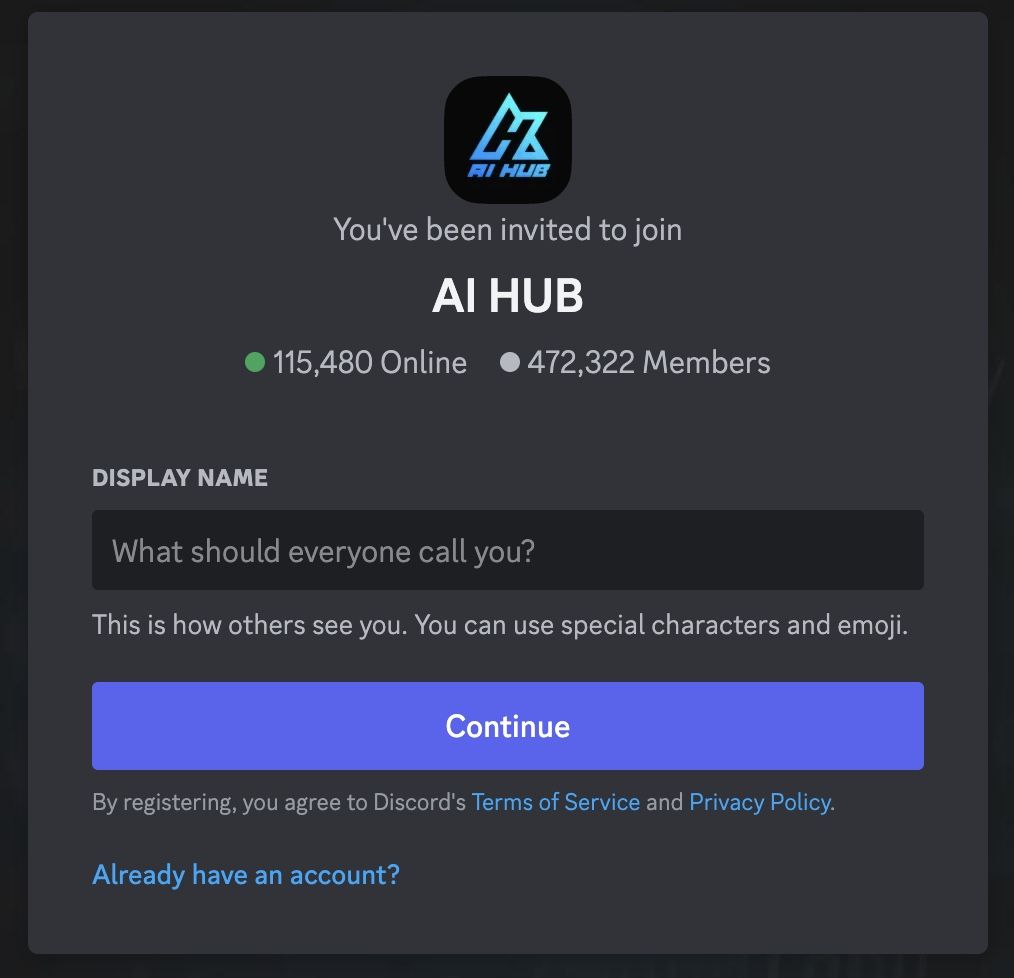

A Discord server called AI Hub, created on March 25, 2023, hosts a large community of AI music creators behind some of the most viral AI songs. AI Hub is dedicated to making and sharing AI music and teaches people how to create songs, offering new creators guides and even ready-made AI models tailored to mimic specific artists’ voices. Members post the songs they make and ask each other troubleshooting questions.

There are now many ways to transform a song’s vocals into a new voice. The original way was to run code on a Colab page that the mods of the server created. Then, someone created a Discord bot on another server called Sable AI Hub in which you can run the model using text commands. Now, there is also the first music AI creation app called Musicfy that allows users to directly import an audio file, choose an artist, and export the new vocals.

This app was made by a student hacker who goes by the online pseudonym ak24 and is also a member of the AI Hub community. The app saw over a hundred thousand uses a day after launching, he said. “This is going to be completely free. The way I’m thinking right now is creating a platform for people to create AI music of whatever they want.”

UMG executive Michael Nash also published an op-ed in February in which he wrote that AI is “diluting the market, making original creations harder to find and violating artists’ legal rights to compensation from their work.” - February 2023

“People are deeply concerned by AI but many also acknowledge that AI as a tool is a good thing to increase workflow, navigate creative block and become more efficient,” Karl Fowlkes, an entertainment and business attorney, told Motherboard. - March 2023

“There are a lot of positives to AI in the music industry but generative AI is something that all stakeholders in the industry need to attack. UMG’s notice to [streaming platforms] was a major domino publicly”. - May 2023

Vocal Synthesis, AI Voice Cloning and Voice Enhancement

Vocal Synthesizers (Synthesizer V)

Vocaloid

Altered Studio

Supertone

Voiceful / Voicemod

Kits.AI - AI vocal toolbox

Easily drag & drop into your DAW

Integrate Kits.AI directly into your favorite DAW or music production software with drag-and-drop functionality.

Lyria’s Symphony: AI Hits a High Note in Music Generation

The Dawn of Lyria: AI’s Melodic Masterpiece

Lyria represents a monumental step in AI’s capabilities in music generation. Unlike previous models, Lyria can adeptly handle the intricate layers of music – from beats and notes to vocal harmonies. This ability to maintain musical continuity across various segments makes it a game-changer.

Dream Track: The New Creative Playground

One of the most exciting applications of Lyria is the ‘Dream Track’ experiment on YouTube Shorts. This feature is designed to forge deeper connections between artists, creators, and fans. By allowing users to generate 30-second soundtracks with the AI-rendered voices and styles of popular artists, Dream Track opens a new realm of interactive and participative music creation.

Empowering Artists with Music AI Tools

DeepMind’s venture extends beyond Lyria. They’re developing a suite of Music AI tools, in collaboration with artists and producers, to augment the creative process. These tools are capable of transforming simple hums into complex tracks, signifying a new era where technology and human creativity merge seamlessly.

Pioneering Responsible Use with SynthID

In an effort to deploy these technologies responsibly, DeepMind is introducing SynthID to watermark AI-generated audio. The watermark is designed to be imperceptible to listeners while allowing the audio to be identified later as AI-generated.

The Future of Music Creation

The advent of Lyria and related tools marks a new frontier in music production, offering artists unprecedented creative possibilities. As these technologies evolve, they promise to reshape the landscape of music creation, making it more accessible, diverse, and interactive.

Conclusion

DeepMind’s Lyria and the accompanying suite of tools represent not just a technological leap, but a new canvas for musical expression. As we embrace these advancements, the future of music seems more exciting and boundless than ever.

TL;DR: Google DeepMind’s new AI music generation model, Lyria, in partnership with YouTube, is a groundbreaking advancement in AI music creation. Along with the Dream Track experiment and Music AI tools, it’s set to transform music creation and interaction, backed by responsible deployment through SynthID watermarking.

Source Separation and Stem Splitting

Demucs AI

Demucs is an open-source deep learning model, developed by researchers at Meta AI, that separates the individual elements of an audio mix. Using deep neural networks to identify each source, it can split a mix into vocals, drums, bass, and other sounds while largely preserving the quality and clarity of the original audio.

Ultimate Vocal Remover

Ultimate Vocal Remover uses state-of-the-art source separation models to remove vocals from audio files.

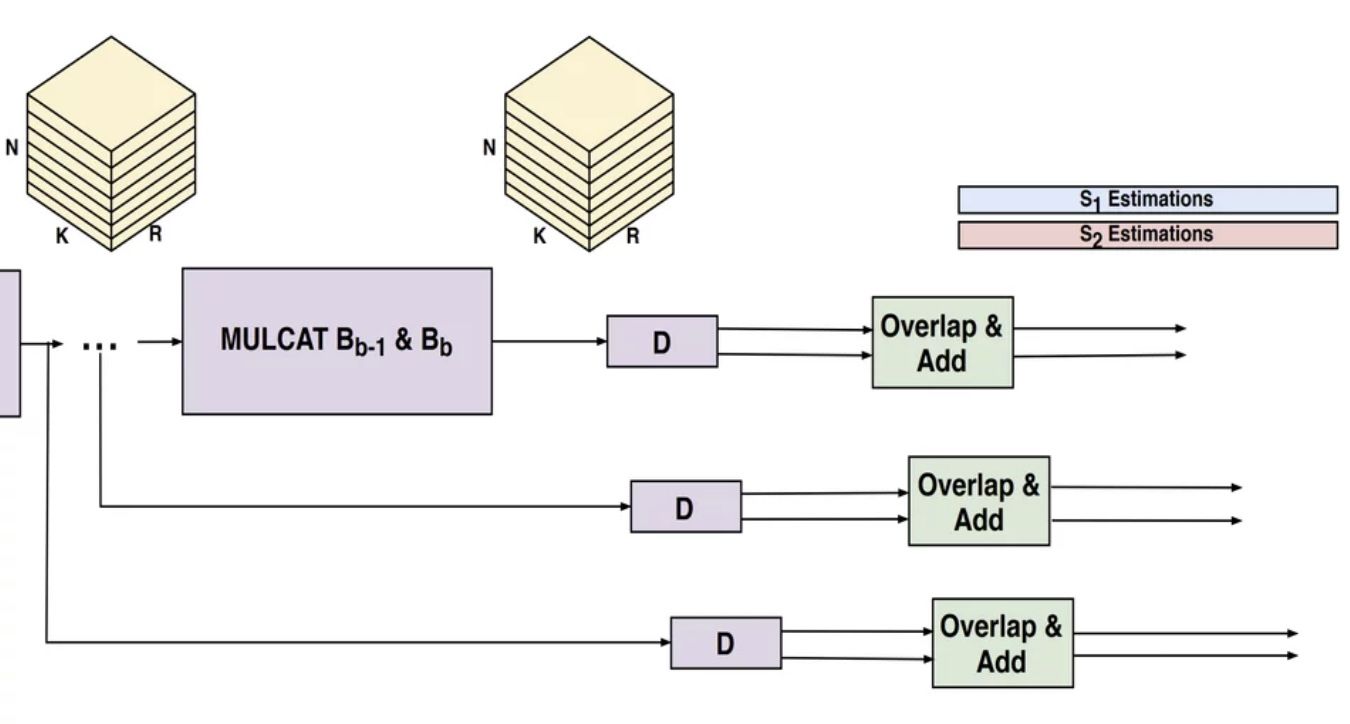

LALAL.AI

LALAL.AI uses precise stem extraction to remove vocal, instrumental, drums, bass, piano, electric guitar, acoustic guitar, and synthesizer tracks without quality loss.
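Many separation tools work by estimating how much of each time-frequency cell in the mixture's spectrogram belongs to each source, then applying that share as a soft mask. The Python sketch below shows only that last step. It is a generic illustration with hypothetical function names and hand-picked numbers standing in for a neural network's estimates; it is not how any of the tools above are implemented (Demucs, for instance, works largely on the raw waveform, with its hybrid versions adding a spectrogram branch).

```python
from typing import List

Matrix = List[List[float]]  # magnitudes indexed as [frequency_bin][time_frame]


def ratio_masks(estimates: List[Matrix], eps: float = 1e-8) -> List[Matrix]:
    """Soft masks: each source's share of the total estimated magnitude in every cell."""
    rows, cols = len(estimates[0]), len(estimates[0][0])
    masks = []
    for est in estimates:
        mask = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                total = sum(src[r][c] for src in estimates) + eps  # eps avoids 0/0
                mask[r][c] = est[r][c] / total
        masks.append(mask)
    return masks


def apply_mask(mixture: Matrix, mask: Matrix) -> Matrix:
    """Scale each mixture cell by the mask value (0 = remove, 1 = keep)."""
    return [[m * w for m, w in zip(m_row, w_row)] for m_row, w_row in zip(mixture, mask)]


if __name__ == "__main__":
    vocals_est = [[3.0, 0.0]]  # hypothetical network output for vocals
    drums_est = [[1.0, 2.0]]   # hypothetical network output for drums
    mixture = [[4.0, 2.0]]
    vocal_mask, drum_mask = ratio_masks([vocals_est, drums_est])
    print(apply_mask(mixture, vocal_mask))  # approximately [[3.0, 0.0]]
    print(apply_mask(mixture, drum_mask))   # approximately [[1.0, 2.0]]
```

In the example, the vocal estimate dominates the first cell and is absent from the second, so the vocal mask keeps 75% of the first mixture cell and none of the second. Because the masks in each cell add up to (almost) 1, the separated stems sum back to the original mixture.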

Paint with Music

Pros, Cons and Limitations of using AI

Pros of AI in Music Production

Cost Efficiency and Accessibility

One of the more significant advantages of AI in music production is its cost-effectiveness. Traditional recording equipment can be prohibitively expensive, but AI music generators, which are software-based, present a more affordable alternative. This democratizes the music production process, allowing producers on a budget to achieve professional sounds without the hefty price tag.

Furthermore, royalty-free AI voices offer unique advantages in terms of cost, scalability, and ease of use compared to traditional voiceover services. For producers aiming to diversify their vocal tracks without relying on session singers, this can be a game-changing tool. With the rise of royalty-free AI voices, the barriers to entry for high-quality production are effectively lowered.

Enhancing Creativity and Output

AI is not just a tool for cost reduction; it's also a catalyst for creative exploration. By handling some of the more technical aspects of music production, such as mastering audio tracks, AI allows artists to focus on the creative side of their projects. According to Rare Connections, 60% of musicians use AI to some extent in their music-making process, whether for mastering, generating artwork, or composing.

Vocal Diversity and Innovation

The music industry is experiencing a transformation with the advent of AI-generated vocals. Producers now have the capability to experiment with a variety of vocal styles and textures that may have been out of reach due to logistical or financial constraints. This innovation is not only expanding the palette of sounds available to producers but also pushing the boundaries of what can be achieved musically.

Cons of AI in Music Production

AI's foray into music production isn't without its detractors. Some worry about the implications of synthetic voices on the authenticity and quality of music. The challenge lies in achieving a balance between the efficiency and innovation that AI brings and the artistry and emotion conveyed by human performance.

Potential Quality Concerns

While AI-generated vocals can mimic the human voice with increasing precision, there remains a debate over whether they can fully capture the nuanced expressions that a real singer brings to a performance. AI relies on deep learning to create synthetic speech that mimics the human voice, yet some argue that it may lack the subtle emotional inflections that come naturally to a live performer.

Ethical and Artistic Considerations

The use of AI in music production also raises ethical questions about originality and the potential devaluation of human musicianship. The idea that a machine could replicate or even outperform the creative output of a person is a contentious issue, stirring up concerns about the future role of artists and the definition of artistry in the age of machines.

Producer Sentiments and Skepticism

Despite the opportunities presented by AI, not all music producers are on board with this technological revolution. A survey revealed that 17.3% of producers have negative perceptions of AI in music production, while a significant portion, 47.9%, remain neutral. This indicates a level of uncertainty or hesitation within the industry, signaling that the full acceptance of AI in music production is still a work in progress.

Music generation

Music generation is notably one of the earliest applications for programmatic technology to enter public and recreational use. The synthesizer, looping machine, and beat pads are all examples of how basic programming could be implemented to create novel music, and this has only grown in versatility and applicability.

Modern production employs complex software to make minute adjustments to physical recordings to achieve the perfect sonic qualities we all associate with recorded tracks. Even further than that, there are plenty of technologies, like Ableton or Logic Pro X, that have been around for a long time that let users directly create music themselves - with nothing but a laptop!

Machine learning and deep learning attempts at music generation have achieved mixed results in the past. Large companies like Google have produced notable work in this field, such as the MusicLM project, but the trained models have largely remained restricted to internal use.

The Rise of AI in Music Production

The intersection of artificial intelligence and music production has been a hot topic for debate in recent years. With the rapid advancement of technology, AI has infiltrated the realm of creative arts, particularly music, and has been met with both enthusiasm and skepticism.

The music industry is witnessing a significant shift towards the integration of AI in various production processes. A study by the music distribution company Ditto discovered that almost 60 percent of artists are already incorporating AI into their music projects. This reflects a growing trend where the tools once reserved for the technologically elite are now accessible to a wider range of creators.

Future predictions suggest that AI will claim up to 50% of the music industry market by 2030. The AI music generation market alone is expected to reach a valuation of $1.10 billion by 2027, growing at an impressive CAGR of 41.89%. This growth is indicative of the transformative impact AI is having on the industry.
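A compound annual growth rate (CAGR) compounds year over year, so percentages that look modest add up quickly. The Python helpers below are a simple illustration with hypothetical function names; the five-year horizon used in the example is an assumption, because the forecast above gives only the end year, not the base year.

```python
def growth_multiplier(cagr: float, years: int) -> float:
    """Factor by which a value grows after `years` of compounding at rate `cagr`."""
    return (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value consistent with a given end value, CAGR, and number of years."""
    return end_value / growth_multiplier(cagr, years)


if __name__ == "__main__":
    # The five-year horizon is an assumption; the source gives only the end year (2027).
    print(round(growth_multiplier(0.4189, 5), 2))          # 5.75
    print(round(implied_start_value(1.10, 0.4189, 5), 2))  # 0.19 (billion USD)
```

At 41.89% a year, a market grows by a factor of about 5.75 over five years, so if the forecast did span five years, the $1.10 billion figure would imply a starting market of roughly $0.19 billion.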

Voice cloning technology, a subset of AI applications, has also become more powerful and prevalent across sectors, including music. Its ability to generate vocals that can be hard to distinguish from human singers has opened new frontiers for producers and artists alike. Notably, the reported talks between Google and Universal Music about licensing artists' voices for AI-generated music signal a major step forward in this domain.

Why Object to GenAI Music Creation?

I asked the Perplexity app what might motivate someone to post a negative message about AI-generated music, and this is the response I got:

The message you referenced reflects a strong emotional response towards AI-generated music, likely driven by a combination of factors:

- Artistic Integrity: The sender may believe that music should be created by humans, valuing the emotional and creative process involved. They might see AI-generated music as lacking authenticity and undermining the hard work and creativity of traditional musicians.

- Fear of Devaluation: There is a concern that AI music could devalue human-created music by flooding the market with easily produced content, potentially reducing the demand for human musicians.

- Economic Threat: The sender might perceive AI music as a threat to the livelihood of musicians, fearing that it could replace human artists in commercial settings where cost-cutting is prioritized.

- Cultural Resistance: The message may stem from a cultural resistance to technological changes in the music industry, where traditionalists view AI as a novelty or gimmick rather than a legitimate tool for music creation.

Overall, the motivation behind such a message likely involves a mix of protecting artistic values, economic concerns, and resistance to technological change in the music industry.

Sources of Discussions

- r/AI_Music - Reddit https://www.reddit.com/r/AI_Music/

- r/musicians - AI music discussion - Reddit https://www.reddit.com/r/musicians/comments/1cz0zkj/ai_music_discussion/

- A.I Music Is A Hot Topic. Is A.I Music For The Better Or Worse? - Reddit https://www.reddit.com/r/ArtificialInteligence/comments/1cj2319/ai_music_is_a_hot_topic_is_ai_music_for_the/

- What are your thoughts on AI generated music? : r/indieheads - Reddit https://www.reddit.com/r/indieheads/comments/1dg4hg0/ai_music_today_being_new_music_friday_im_seeing_a/

- AI Music has gotten TOO real now... - Reddit https://www.reddit.com/r/Music/comments/1b9upic/ai_music_has_gotten_too_real_now/

- The future of AI-generated music : r/musicians - Reddit https://www.reddit.com/r/musicians/comments/1cphskj/the_future_of_aigenerated_music/

- ai music generator is scary : r/musicproduction - Reddit https://www.reddit.com/r/musicproduction/comments/1c8g6az/ai_music_generator_is_scary/

- Interesting AI Music discussion - Reddit https://www.reddit.com/r/Music/comments/1dh94vk/interesting_ai_music_discussion/

- Unlimited Song Generation

- Customization Options

- Real-Time Collaboration and Sharing

- Real-Time Feedback

- Copyright Ownership and Royalty-free music

- Limited Instructional Resources

Unlimited Song Generation

Is song generation actually unlimited, or not?

Customization Options

Do these tools allow adjustments in tempo, key, chord progression, volume, panning, and melody editing for crafting unique melodies?

Real-Time Collaboration and Sharing

Do these tools enable real-time collaboration and easy composition export in formats like MIDI, MP3, and WAV?

Real-Time Feedback

Can you make adjustments with a live preview, so you can hear your music change in real time?

Copyright Ownership and Royalty-free music

Most of these tools retain copyright ownership of works produced, so how does this affect the output if used by content producers, music producers or live performers?

Limited Instructional Resources

There is a lack of comprehensive instructional resources for utilizing features effectively. Is this a barrier to adoption by both novice and experienced music producers?

Producers' Perspectives

The Authenticity Debate

At the heart of the conversation about AI in music is the question of authenticity. Can a track mastered by AI convey the same emotional depth as one refined by human ears? While 22% of music producers have embraced AI for mastering, others worry that the human touch may be lost in translation. The subtleties of a piece of music—its dynamics, timbre, and emotional resonance—have traditionally been shaped by the artist's intuition and experience. It remains to be seen whether AI can truly replicate these human qualities.

The Emotional Connection

Music is an art form that thrives on connection—between the artist and their creation, and between the creation and the listener. AI-generated vocals and compositions may be technically proficient, but some argue that they lack the soul of human-generated content. The complexity of human emotion, often conveyed through music, could be diminished if AI cannot capture the same depth of feeling.

Job Security for Musicians

There's also the concern for job security among musicians and session singers. If AI can produce vocals and compositions that are on par with those of humans, what does that mean for the livelihoods of these artists? The music industry has long been a competitive space, and the introduction of AI could exacerbate this, potentially reducing the demand for human performers.

Ethical Implications

Beyond the practical considerations, there are ethical implications to consider. The use of AI to generate music raises questions about copyright and ownership. Who owns a piece of music created by AI? And what rights do session singers or voice actors have when their voices are cloned? These are questions that the industry will need to navigate carefully.

The Human Element

Despite these concerns, there's a strong argument to be made for the human element in music production. Music is not just about sound; it's about storytelling, expression, and the human experience. While AI can enhance the technical aspects of production, it may never replace the creativity and emotional input that humans bring to the process.

AI in music production offers a wealth of possibilities for enhancing creativity, reducing costs, and democratizing the music-making process. At the same time, it presents challenges that must be addressed with care and consideration. For producers, the advent of AI means adapting to change, embracing new tools, and finding ways to maintain the human element that is at the heart of great music. As we move forward, the music industry will undoubtedly continue to evolve, and with it, the role of AI in shaping the sounds of the future.

The Impact of Music on Learning

Music has a profound impact on the process of learning, especially in STEM (Science, Technology, Engineering, and Mathematics) fields.

Enhanced Focus and Productivity

Music can help individuals concentrate better, leading to increased focus and productivity while studying complex STEM concepts. It can create a conducive learning environment by reducing distractions and enhancing cognitive abilities.

Memory Enhancement

Certain types of music, such as classical or ambient sounds, have been shown to improve memory retention. This can be particularly beneficial when trying to internalize and recall vast amounts of information typically encountered in STEM subjects.

Creativity and Problem-Solving Skills

Music stimulates the creative centers of the brain, fostering innovative thinking and problem-solving skills essential in STEM fields. By engaging with music, individuals can develop a more holistic approach to tackling challenges and exploring new perspectives.

Stress Reduction

The emotional impact of music can help alleviate stress and anxiety, creating a more relaxed learning environment conducive to absorbing complex STEM theories and applications. Reduced stress levels can lead to better cognitive functioning and information processing.

Mood Regulation

Music has the power to regulate mood and emotions, which can significantly impact one's overall learning experience. By listening to uplifting or calming music, individuals can cultivate a positive mindset that enhances their ability to engage with STEM materials effectively.

The rise of focus music

This genre is experiencing an upswing, partly because it improves productivity.

Many of us were using focus music long before it had an official name or any trending playlists. Jazz and downtempo have been my go-tos for tuning out distractions and centering on the task at hand since my college days. Today, more people are gravitating toward this music and its ability to boost concentration—no question that it’s going mainstream. Focus music can be defined as anything that helps you pay attention, reduce distractions, and maintain productivity.

As a society, we have never been more distracted. According to the American Psychological Association, 86% of Americans say they constantly or frequently check their emails, texts, and social media accounts, leading to higher stress levels. A University of California, Irvine study found that it takes people about 23 minutes on average to return to their task after an interruption, highlighting the negative impact of digital interruptions.

To help combat the endless list of technological distractions, people are turning to music. Many stats showcase the ascent of the focus genre over the last few years. Spotify shared a 50% increase in listening time for focus playlists in their 2023 Wrapped report and Pandora had a 47% increase in focus music stations created in 2023 compared to the prior year.

WHY IS THIS MUSIC GROWING SO RAPIDLY?

The trend is not due to demographic changes—as the data shows, the interest is not confined to a single age group. We do know that the pandemic and the shift to working from home certainly play a part. The pandemic created a perfect storm of circumstances that allowed focus music to flourish, meeting the need to be more centered during challenging times. The music resonates with people who struggle to concentrate while studying or working from home with multiple stressors and disruptions.

The genre was also well aligned for musicians who weren’t able to get into studios. As more people were tuning in to focus music, it paved the way for more artists to create it. The styles of music typically found in these playlists are instrumental and often lo-fi. Since there were no tours happening, there was no need to fill large venues and festivals with vocal-fueled anthems. Many artists found opportunities to experiment with these instrumental soundscapes from the comfort of a socially-distanced studio.

HOW DOES IT IMPACT OUR PRODUCTIVITY?

We know that sustained attention is required for performing creative and business tasks, but sustaining concentration on task-relevant information over an extended period is mentally demanding. People tend to experience attention lapses that fall into two general camps: mind wandering (hypo-arousal) or external distraction (hyper-arousal). The appeal of focus music is its potential to sustain attention by reducing both types of lapses.

A 2020 study from Goldsmiths, University of London “provides evidence for music’s ability to improve focused attention and performance—by increasing arousal to an intermediate level optimal for performance—and suggests that people can derive benefit from music listening while performing low-demanding tasks.”

Mike Savage, artist relations lead of a science-backed music catalog, works closely with producers to create effective focus music. He has a well-researched set of criteria he’s looking for when commissioning music. “One of the most important components is a stable rhythmic groove that keeps your mind engaged enough to be productive, but not so engaged that it is distracted from the task at hand. We look for repetitive rhythms and steady melodies that don’t demand too much attention, which can be counter-intuitive for artists, but really work in this context,” he shares.

WHY IS FOCUS MUSIC BEING EMBRACED BY MORE BRANDS AND BUSINESSES?

Wellness trends such as mindfulness, yoga, and functional medicine are gaining popularity as people across the globe fight burnout and anxiety. Brands in every sector are incorporating these trends into their offerings to stay relevant and appeal to health-conscious consumers. Recent examples include Whole Foods, which started offering wellness clubs and classes on topics like stress management, meditation, and holistic nutrition. Even Urban Outfitters now sells a range of products from supplements and health foods to yoga mats and focus/study music.

As consumers are becoming more aware of the importance of overall wellbeing, they seek tools for stress management and increased performance. Focus music is a readily available tool with no side effects and massive upside.

There are reasons to expect that listening to music in this genre will rise even faster in the coming years. HR departments are looking for new solutions to help their remote workers stay productive and connected; advocating for focus music playlists is an easy way for employers to help promote sustained attention and create a shared experience among their employees. Consumer streaming services will meet the rising demand for this music by expanding the development and promotion of focus music playlists, further increasing consumption. As new research studies validate music’s effectiveness in enhancing concentration levels, the genre will become more refined and gain even more momentum to help combat the scourge of digital distractions.